Brian Nosek's team set out to replicate scores of studies.

Don’t trust everything you read in the psychology literature. In fact, two thirds of it should probably be distrusted.

In the biggest project of its kind, Brian Nosek, a social psychologist and head of the Center for Open Science in Charlottesville, Virginia, and 269 co-authors repeated work reported in 98 original papers from three psychology journals, to see if they independently came up with the same results.

The studies they took on ranged from whether expressing insecurities perpetuates them to differences in how children and adults respond to fear stimuli, to effective ways to teach arithmetic.

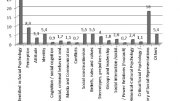

According to the replicators' qualitative assessments, as previously reported by Nature, only 39 of the 100 replication attempts were successful. (There were 100 completed replication attempts on the 98 papers, as in two cases replication efforts were duplicated by separate teams.) But deciding whether a replication attempt was successful is not straightforward. Today in Science, the team report the several measures they used to answer this question.

The 39% figure derives from the team's subjective assessments of success or failure (see graphic, 'Reliability test'). Another method assessed whether a statistically significant effect could be found, and produced an even bleaker result. Whereas 97% of the original studies found a significant effect, only 36% of replication studies did. The team also found that the average effect size in the replication studies was only half that reported in the original studies.

From this work alone, there is no way of knowing whether any individual paper is true or false, says Nosek. Either the original or the replication work could be flawed, or crucial differences between the two might be unappreciated. Overall, however, the project points to widespread publication of work that does not stand up to scrutiny.

Although Nosek is quick to say that most resources should be funnelled towards new research, he suggests that a mere 3% of scientific funding devoted to replication could make a big difference. The current amount, he says, is near-zero.

Replication failure

John Ioannidis, an epidemiologist at Stanford University in California, says that the true replication-failure rate could exceed 80%, even higher than Nosek's study suggests. This is because the Reproducibility Project targeted work in highly respected journals, the original scientists worked closely with the replicators, and replicating teams generally opted for papers employing relatively easy methods — all things that should have made replication easier.

Source: www.nature.com
